Portkey is a 2-line upgrade to make your AG2 agents reliable, cost-efficient, and fast.

Portkey adds 4 core production capabilities to any AG2 agent:

- Routing to 200+ LLMs

- Making each LLM call more robust

- Full-stack tracing, plus cost and performance analytics

- Real-time guardrails to enforce behavior

Getting Started

- Install the required packages.

- Configure AG2 with Portkey: generate your API key in the Portkey Dashboard.

- Run your agent.
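The configuration and run steps above can be sketched as follows. This is a minimal sketch, not the official integration code. It assumes the `ag2` package (imported as `autogen`) is installed, that AG2's OpenAI-style `config_list` entries accept `base_url` and `default_headers`, that Portkey's gateway lives at `https://api.portkey.ai/v1`, and that it reads the `x-portkey-api-key` and `x-portkey-provider` headers (the `portkey-ai` package's `createHeaders` helper builds the same headers). The helper `build_portkey_config_list`, the `run_agent` function, the model name and the placeholder keys are illustrative.

```python
import os

# Assumed Portkey gateway endpoint (portkey-ai exports it as PORTKEY_GATEWAY_URL).
PORTKEY_GATEWAY_URL = "https://api.portkey.ai/v1"


def build_portkey_config_list(model, provider, provider_api_key, portkey_api_key):
    """Hypothetical helper: an AG2 config_list whose requests go through Portkey."""
    return [
        {
            "model": model,
            "api_key": provider_api_key,      # key for the underlying LLM provider
            "api_type": "openai",             # the gateway exposes an OpenAI-compatible API
            "base_url": PORTKEY_GATEWAY_URL,  # call the gateway instead of the provider
            "default_headers": {
                "x-portkey-api-key": portkey_api_key,  # generated in the Portkey Dashboard
                "x-portkey-provider": provider,        # which provider the gateway forwards to
            },
        }
    ]


def run_agent(config_list):
    # Needs the ag2 package and real API keys; imported lazily so the
    # config-building part above works without it.
    from autogen import AssistantAgent, UserProxyAgent

    assistant = AssistantAgent("assistant", llm_config={"config_list": config_list})
    user_proxy = UserProxyAgent(
        "user_proxy",
        human_input_mode="NEVER",
        max_consecutive_auto_reply=1,
        code_execution_config=False,
    )
    user_proxy.initiate_chat(assistant, message="Explain what an LLM gateway does in one sentence.")


config_list = build_portkey_config_list(
    model="gpt-4o-mini",
    provider="openai",
    provider_api_key=os.environ.get("OPENAI_API_KEY", "sk-placeholder"),
    portkey_api_key=os.environ.get("PORTKEY_API_KEY", "pk-placeholder"),
)

# Only start a real chat when a Portkey key is actually configured.
if __name__ == "__main__" and os.environ.get("PORTKEY_API_KEY"):
    run_agent(config_list)
```

The two added lines of the "2-line upgrade" correspond to `base_url` and `default_headers`; the rest of the agent code stays as it would be without Portkey.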

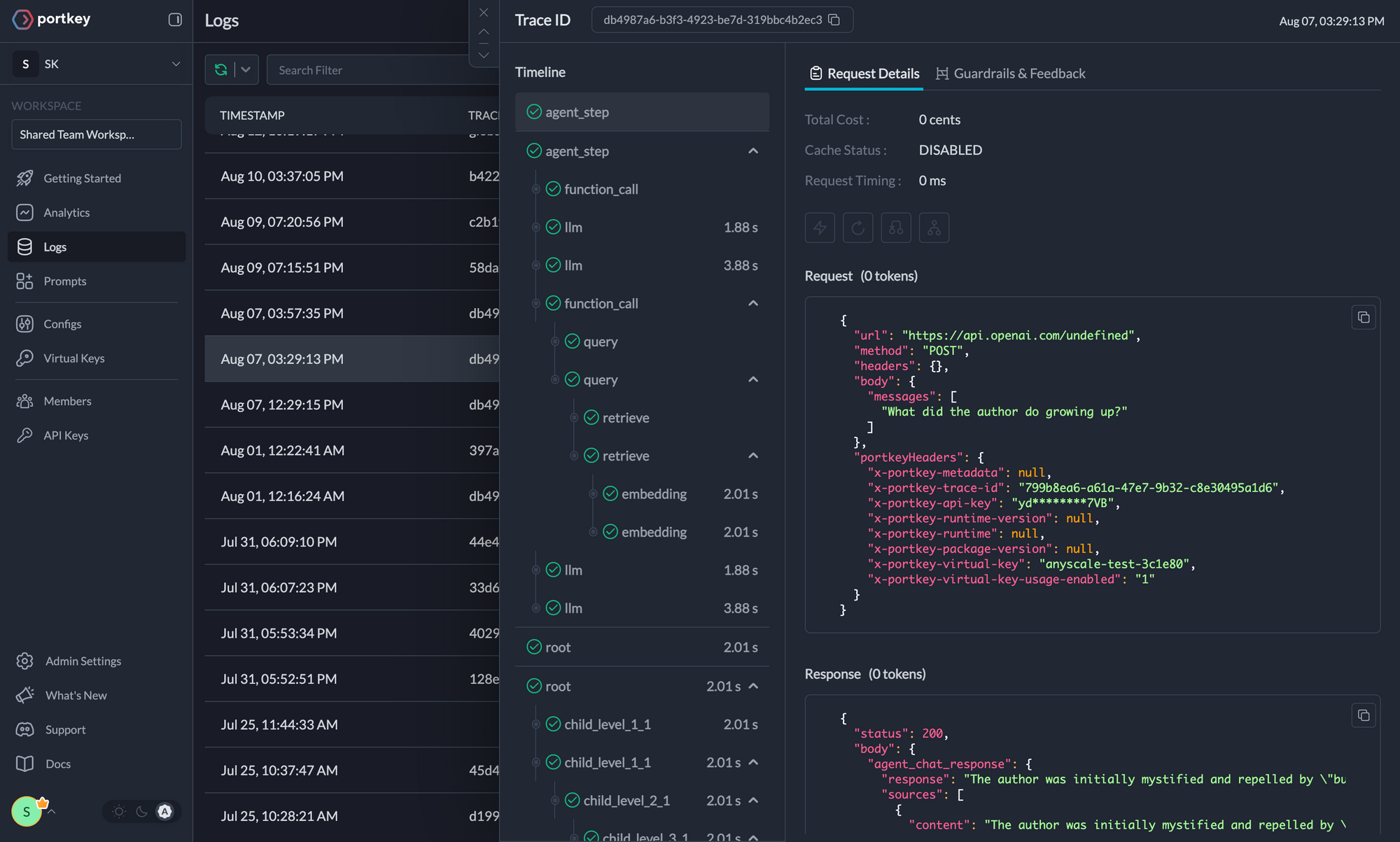

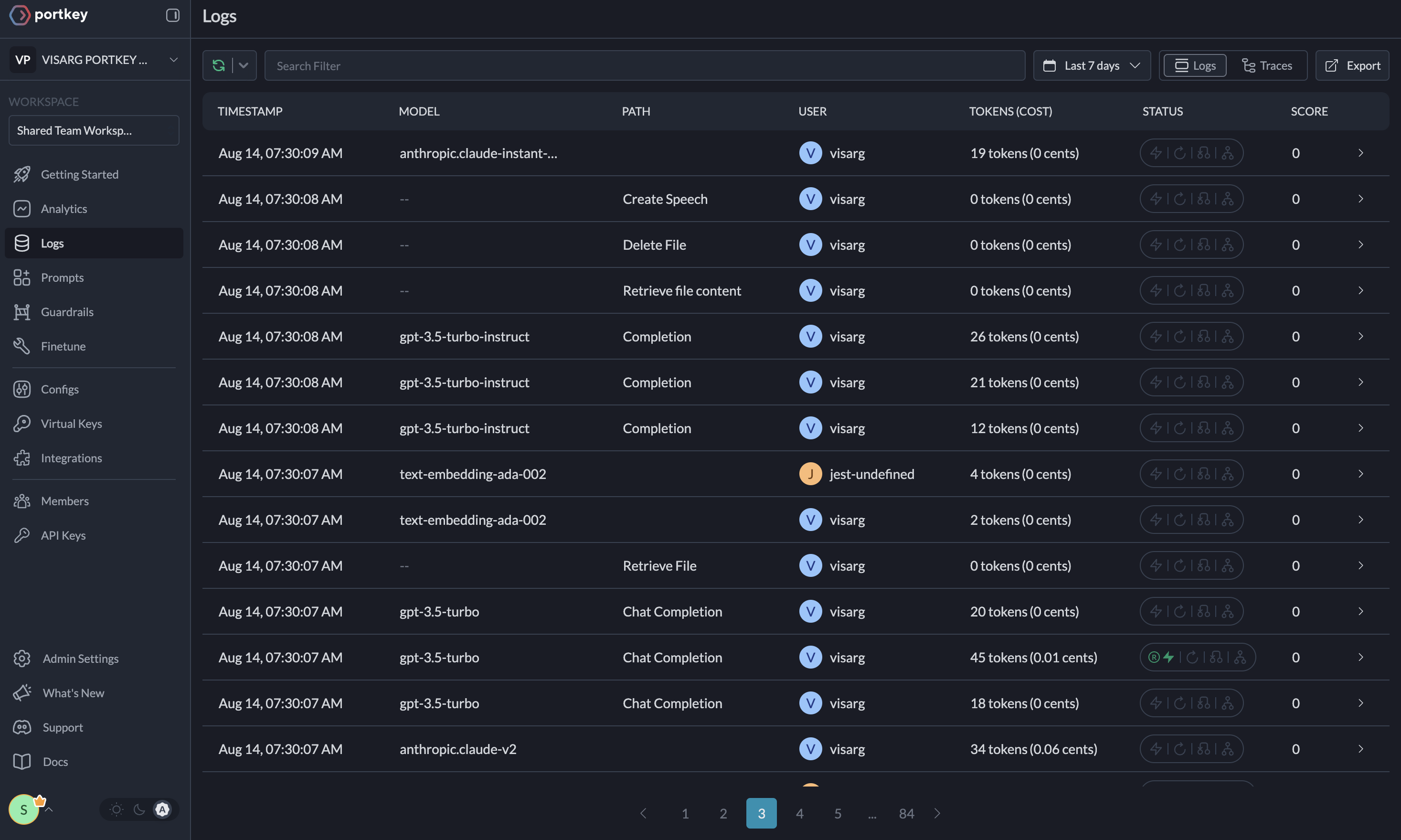

Here’s the output from your agent’s run on Portkey’s dashboard:

Key Features

Portkey offers a range of advanced features to enhance your AG2 agents. Here’s an overview:

| Feature | Description |
|---|---|
| 🌐 Multi-LLM Integration | Access 200+ LLMs with simple configuration changes |
| 🛡️ Enhanced Reliability | Implement fallbacks, load balancing, retries, and much more |
| 📊 Advanced Metrics | Track costs, tokens, latency, and 40+ custom metrics effortlessly |
| 🔍 Detailed Traces and Logs | Gain insights into every agent action and decision |
| 🚧 Guardrails | Enforce agent behavior with real-time checks on inputs and outputs |
| 🔄 Continuous Optimization | Capture user feedback for ongoing agent improvements |
| 💾 Smart Caching | Reduce costs and latency with built-in caching mechanisms |
| 🔐 Enterprise-Grade Security | Set budget limits and implement fine-grained access controls |
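Several reliability, caching and tracing features in the table are driven by a gateway config attached to each request. The sketch below shows one such config combining retries, simple caching and a fallback between two targets, serialized into request headers. The field names (`retry`, `cache`, `strategy`, `targets`, `virtual_key`) and the `x-portkey-config` and `x-portkey-trace-id` headers are assumptions based on Portkey's config format and should be checked against its documentation; the virtual-key names, trace ID and API key are placeholders.

```python
import json

# Assumed Portkey gateway config: retry failed calls, cache identical
# requests, and fall back to a second target if the first one fails.
portkey_config = {
    "retry": {"attempts": 3},          # retry a failed call up to 3 times
    "cache": {"mode": "simple"},       # exact-match response caching
    "strategy": {"mode": "fallback"},  # try targets in order until one succeeds
    "targets": [
        {"virtual_key": "primary-virtual-key"},  # placeholder
        {"virtual_key": "backup-virtual-key"},   # placeholder
    ],
}

# The config travels as a JSON string header; these headers could be used as
# default_headers in an AG2 config_list entry pointed at the Portkey gateway.
portkey_headers = {
    "x-portkey-api-key": "pk-placeholder",
    "x-portkey-config": json.dumps(portkey_config),
    "x-portkey-trace-id": "ag2-demo-run",  # groups this run's calls in the trace view
}
```

Keeping these policies in the config rather than in agent code means retries or fallbacks can change without touching the agents themselves.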

Colab Notebook

For a hands-on example of integrating Portkey with AG2, check out our notebook.

Advanced Features

Interoperability

Easily switch between 200+ LLMs by changing the provider and API key in your configuration.
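To make the switch concrete, the sketch below builds two AG2 config entries routed through Portkey, one for OpenAI and one for Anthropic, and compares them. It assumes the same gateway URL and `x-portkey-*` headers as an OpenAI-compatible Portkey setup; the helper `portkey_entry`, the model IDs and the placeholder keys are illustrative.

```python
PORTKEY_GATEWAY_URL = "https://api.portkey.ai/v1"  # assumed gateway endpoint


def portkey_entry(model, provider, provider_api_key, portkey_api_key="pk-placeholder"):
    """Hypothetical helper: one AG2 config_list entry routed through Portkey."""
    return {
        "model": model,
        "api_key": provider_api_key,
        "api_type": "openai",             # unchanged: the gateway speaks the OpenAI API
        "base_url": PORTKEY_GATEWAY_URL,  # unchanged: always the gateway
        "default_headers": {
            "x-portkey-api-key": portkey_api_key,
            "x-portkey-provider": provider,  # tells the gateway where to forward
        },
    }


openai_entry = portkey_entry("gpt-4o-mini", "openai", "sk-openai-placeholder")
anthropic_entry = portkey_entry("claude-3-5-sonnet-20240620", "anthropic", "sk-ant-placeholder")

# Keys whose values differ between the two backends.
changed = {k for k in openai_entry if openai_entry[k] != anthropic_entry[k]}
print(sorted(changed))  # ['api_key', 'default_headers', 'model']
```

Only the model, the provider key and the provider header differ, so the agent definitions can stay the same while the backend changes.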