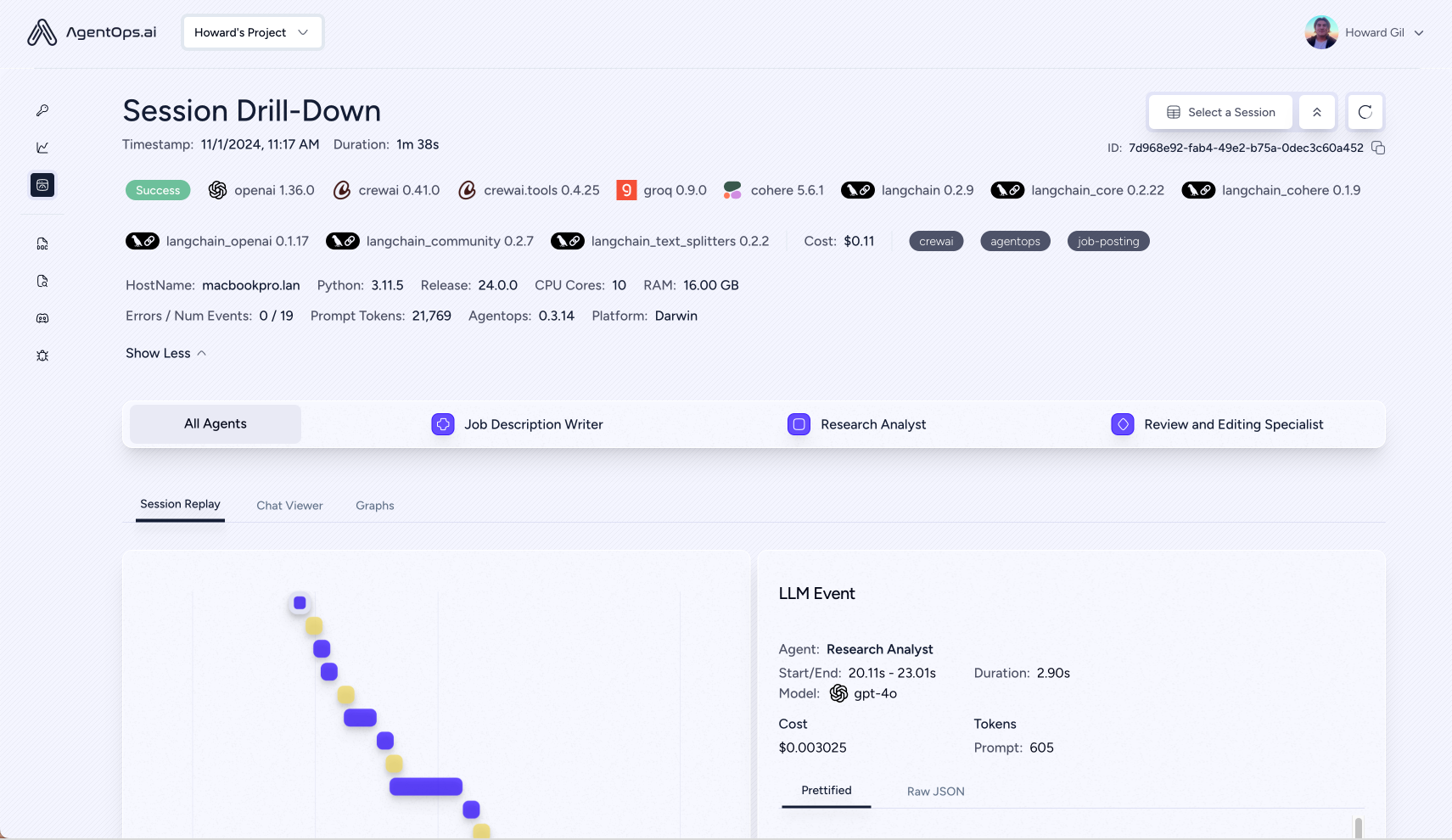

AgentOps provides session replays, metrics, and monitoring for AI agents.

At a high level, AgentOps gives you the ability to monitor LLM calls, costs, latency, agent failures, multi-agent interactions, tool usage, session-wide statistics, and more. For more info, check out the AgentOps Repo.

Overview Dashboard

Session Replays

Adding AgentOps to an existing Autogen service.

To get started, you’ll need to install the AgentOps package and set an API key. AgentOps automatically configures itself when it’s initialized, meaning your agent run data will be tracked and logged to your AgentOps account right away.

Some extra dependencies are needed for this notebook, which can be installed via pip.

For more information, please refer to the installation guide.

Set an API key

By default, the AgentOps init() function will look for an environment variable named AGENTOPS_API_KEY. Alternatively, you can pass one in as an optional parameter.

Create an account and obtain an API key at AgentOps.ai.
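The two ways of supplying the key described above can be sketched as follows. This is a minimal illustration, not the library's own lookup logic: `resolve_api_key` and `start_agentops` are hypothetical helper names, and giving the explicit argument priority over the environment variable is an assumption. The only AgentOps call used is `agentops.init(api_key=...)`, and the import is deferred so the helper can be read and tested without the package installed.

```python
import os
from typing import Optional


def resolve_api_key(explicit_key: Optional[str] = None) -> Optional[str]:
    """Hypothetical helper: pick the key to hand to AgentOps.

    Assumption: an explicitly passed key wins; otherwise fall back to
    the AGENTOPS_API_KEY environment variable.
    """
    if explicit_key:
        return explicit_key
    return os.environ.get("AGENTOPS_API_KEY")


def start_agentops(explicit_key: Optional[str] = None) -> None:
    """Initialize AgentOps with whichever key is available."""
    key = resolve_api_key(explicit_key)
    if key is None:
        raise RuntimeError(
            "No API key found: set AGENTOPS_API_KEY or pass a key explicitly."
        )
    # Deferred import: requires the agentops package to be installed.
    import agentops

    agentops.init(api_key=key)
```

Calling `start_agentops()` with no argument relies on the environment variable, while `start_agentops("your-key")` passes the key as a parameter. Failing early when neither is present keeps a missing key from surfacing later as an untracked session.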

Simple Chat Example

Tool Example

AgentOps also tracks when Autogen agents use tools. You can find more information on this example in tool-use.ipynb. In this example, the tracked events include each use of the calculator tool and each call to OpenAI for LLM use.
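As a rough sketch of what such a calculator tool could look like, the block below defines a plain Python function and a helper that registers it with two agents. The function body and the `register_calculator` helper are illustrative assumptions rather than the notebook's exact code. The helper assumes both arguments are already-constructed AutoGen `ConversableAgent` instances and uses their `register_for_llm` and `register_for_execution` methods.

```python
from typing import Annotated, Literal

Operator = Literal["+", "-", "*", "/"]


def calculator(a: int, b: int, operator: Annotated[Operator, "operator"]) -> int:
    """Apply a basic arithmetic operator to two integers."""
    if operator == "+":
        return a + b
    if operator == "-":
        return a - b
    if operator == "*":
        return a * b
    if operator == "/":
        # Division result is truncated toward zero to keep an int return type.
        return int(a / b)
    raise ValueError(f"Invalid operator: {operator}")


def register_calculator(assistant, user_proxy) -> None:
    """Hypothetical helper: expose `calculator` to a pair of AutoGen agents.

    Assumption: `assistant` proposes tool calls and `user_proxy` executes
    them; both are autogen ConversableAgent instances.
    """
    assistant.register_for_llm(
        name="calculator", description="A simple calculator"
    )(calculator)
    user_proxy.register_for_execution(name="calculator")(calculator)
```

With AgentOps initialized before the chat starts, each invocation of the registered tool during the conversation is the kind of event the dashboard is described as recording.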